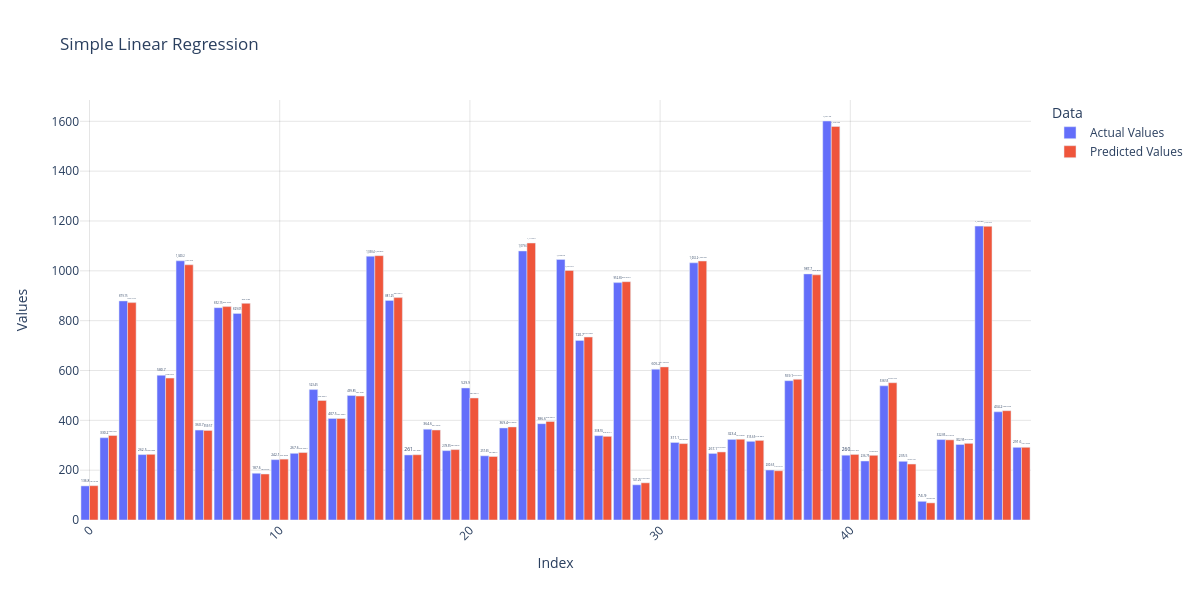

import plotly.express as px

import plotly.io as pio

# Create the bar plot

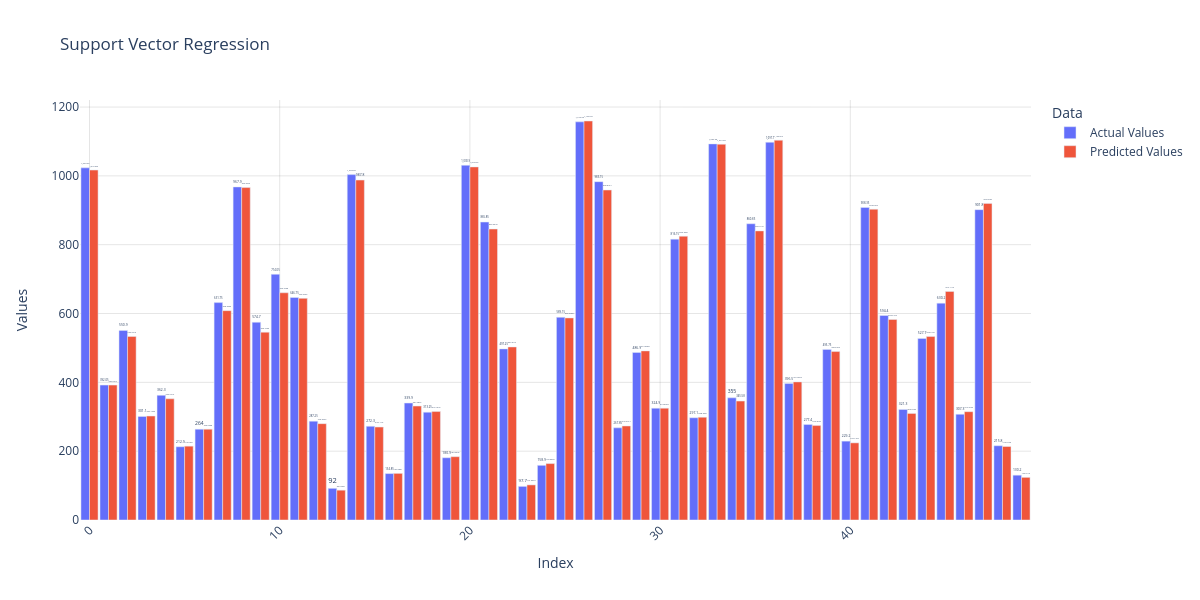

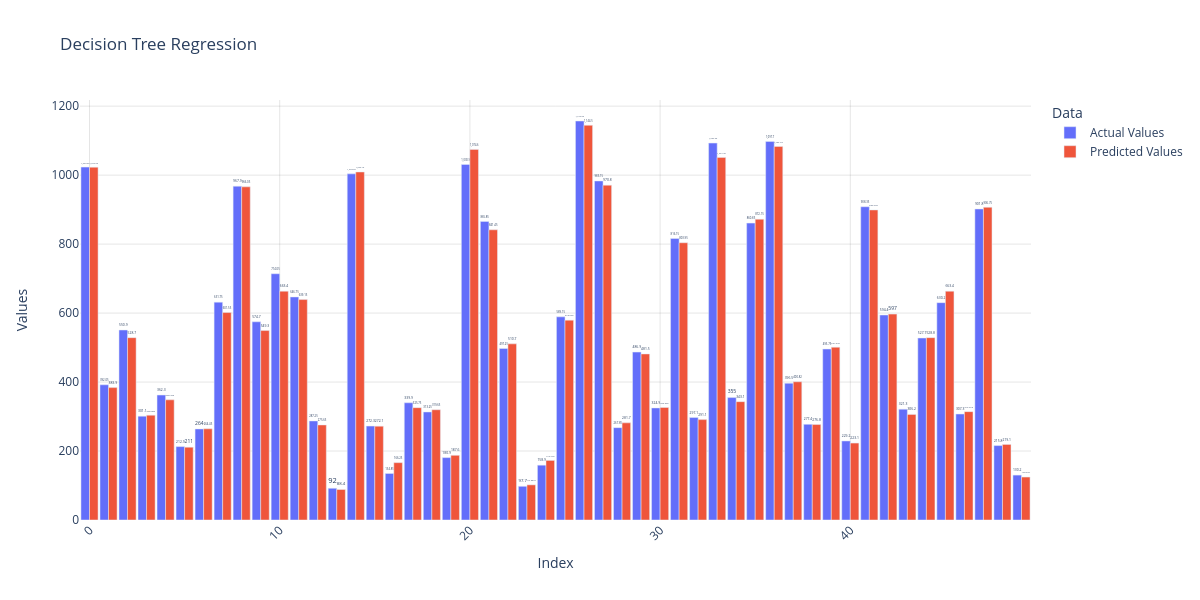

fig = px.bar(df1.head(50), title='Simple Linear Regression', barmode='group', color_discrete_sequence=px.colors.qualitative.Plotly)

# Customize the layout

fig.update_layout(

xaxis_title='Index',

yaxis_title='Values',

legend_title='Data',

width=1200,

height=600,

xaxis_tickangle=-45, # Rotate x-axis labels for better readability

showlegend=True, # Show legend

font=dict(size=12), # Set font size

plot_bgcolor='rgba(0,0,0,0)', # Set plot background color

paper_bgcolor='rgba(0,0,0,0)', # Set paper background color

bargap=0.1, # Set gap between bars

xaxis=dict(showgrid=True, gridcolor='rgba(0,0,0,0.1)'), # Show x-axis gridlines

yaxis=dict(showgrid=True, gridcolor='rgba(0,0,0,0.1)') # Show y-axis gridlines

)

# Add data labels to the bars

fig.update_traces(texttemplate='%{y}', textposition='outside')

# Display the plot

fig.show()

# Save the plot as a PNG file

# pio.write_image(fig, 'bar_plot.png')
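The plotting cell expects a `df1` defined earlier in the notebook, which is not shown here. Below is a minimal sketch, assuming `df1` holds actual and predicted values from a simple linear regression. The synthetic data, the least-squares fit via `np.polyfit`, and the column names `Actual` and `Predicted` are all hypothetical stand-ins. The final step reshapes the frame to long form, which mirrors how Plotly Express reads wide-form input: the index goes on the x-axis, and the column names become the color/legend groups (by default labelled `variable` and `value`, which is why the layout above overrides the axis and legend titles).

```python
import numpy as np
import pandas as pd

# Hypothetical data: y depends linearly on x plus Gaussian noise.
rng = np.random.default_rng(seed=0)
x = rng.uniform(0, 10, size=200)
y = 3.0 * x + 2.0 + rng.normal(0, 1.5, size=200)

# Fit y ~ slope * x + intercept by least squares (a degree-1 polynomial fit).
slope, intercept = np.polyfit(x, y, deg=1)
y_pred = slope * x + intercept

# Wide-form frame: one row per observation, one column per series.
df1 = pd.DataFrame({"Actual": y, "Predicted": y_pred})

# Long-form equivalent of the first 50 rows: the index becomes an "index"
# column, and each (row, column) pair becomes one record.
long_df = (
    df1.head(50)
    .reset_index()
    .melt(id_vars="index", var_name="variable", value_name="value")
)
print(long_df.head())
```

With this `df1` in scope, the plotting cell should run unchanged. Passing `long_df` to `px.bar` with `x="index"`, `y="value"`, `color="variable"` and `barmode="group"` should produce essentially the same grouped chart. The explicit form is useful when you want to rename or filter series before plotting.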